Our Human Value in the Age of AI

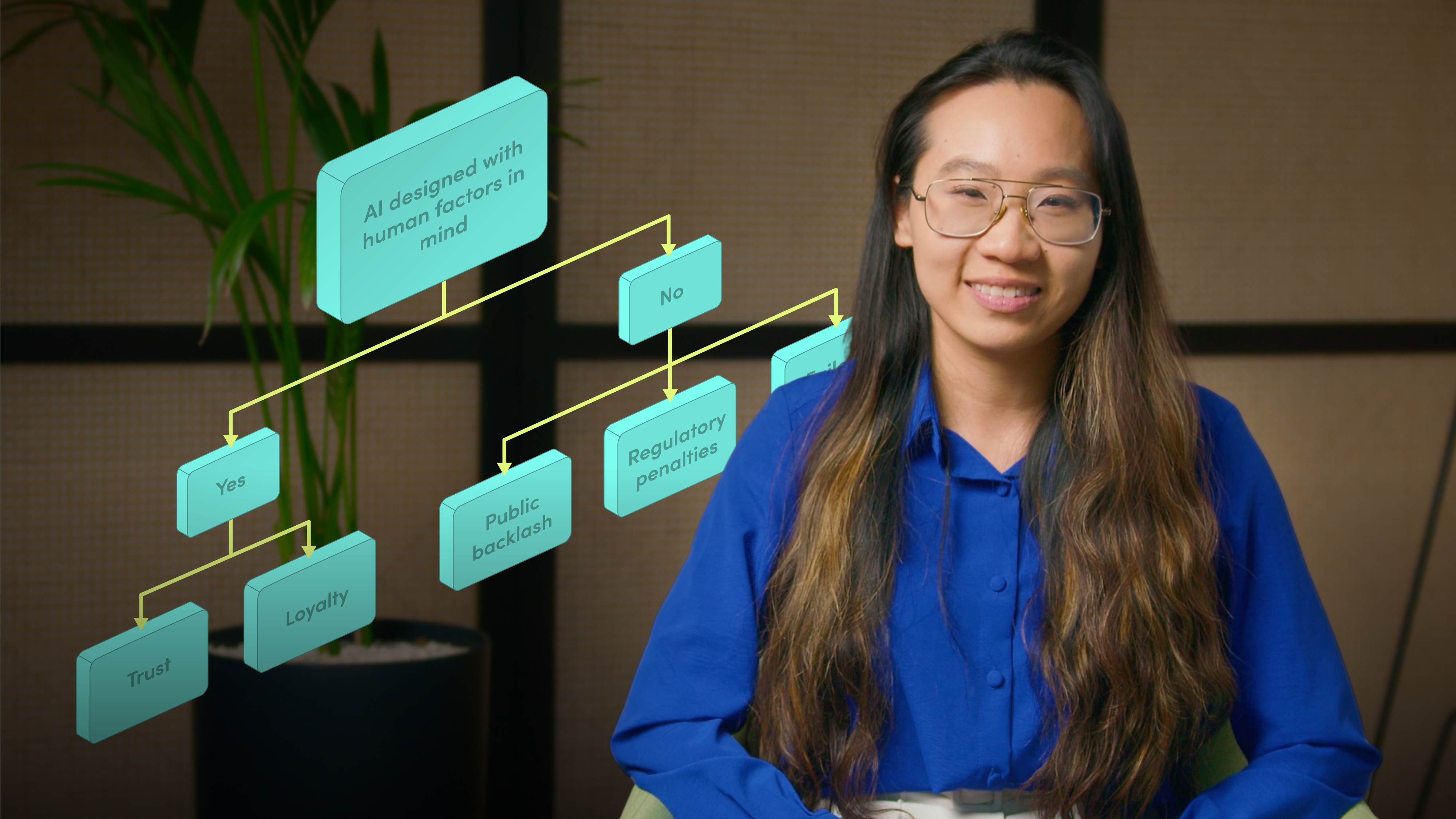

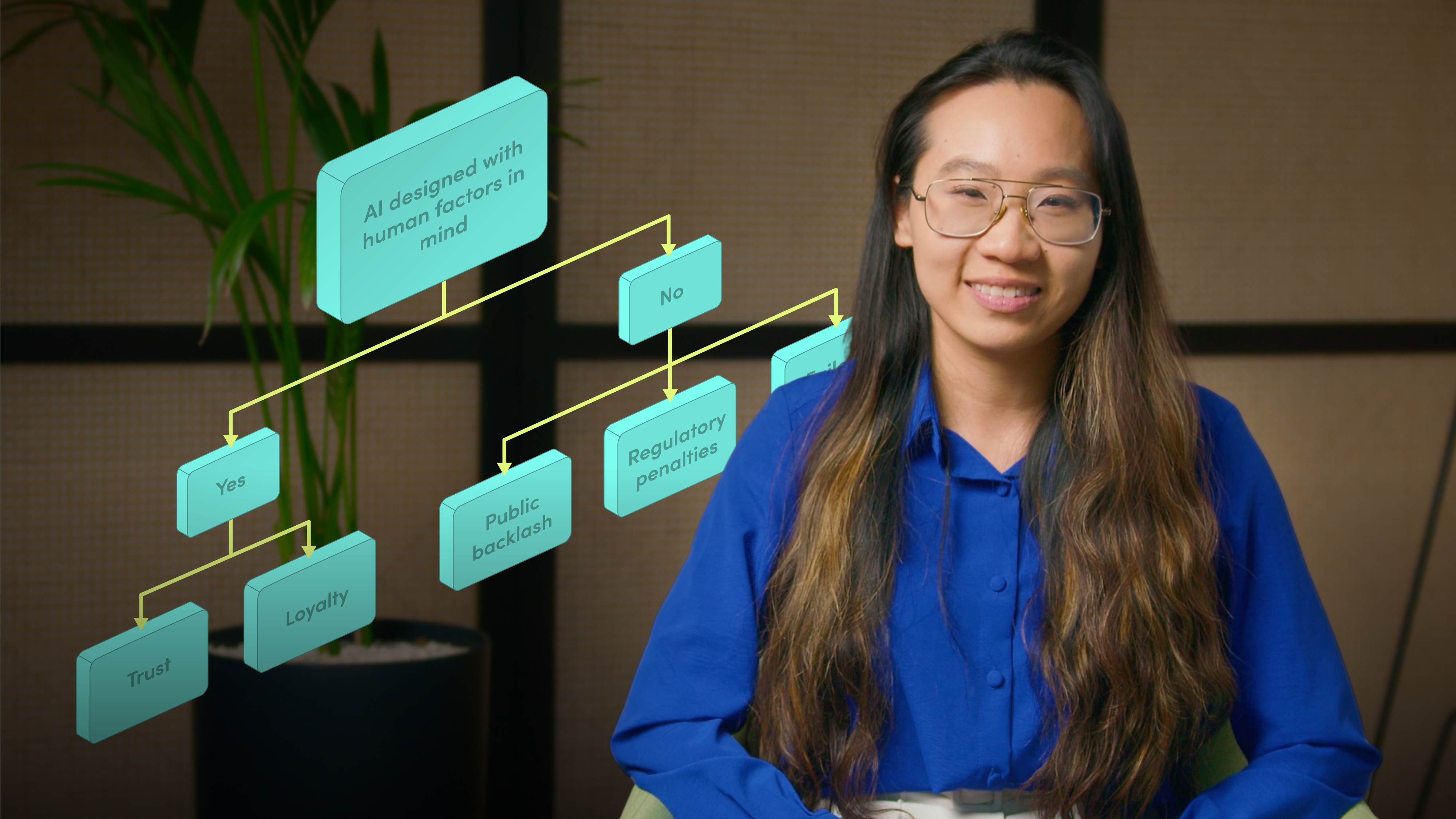

Emily Yang

Human-Centred AI (HCAI) Specialist

Discover how human-AI collaboration creates better outcomes than either humans or AI can achieve alone. Join Emily Yang and learn how inclusive design, social impact metrics, and human oversight ensure AI strengthens human judgment, empathy, and trust across every stage of development.

16 mins 59 secs

Key learning objectives:

Understand how human-AI collaboration outperforms either humans or machines alone

Understand the risks of excluding diverse stakeholders in AI development

Outline methods for measuring the social impact of AI systems

Outline practical principles to design AI that enhances human agency
