Beyond the fear of AI: The role for learning in the data-driven business

Henry White

Co-founder and CEO of xUnlocked

Bridging the AI confidence gap with the power of workforce learning

“Public company board members say the swift rise of AI in the workplace is an issue keeping them up at night.” This quote from a recent Wall Street Journal article rings true in many of the conversations we have had with those in leadership roles across business sectors.

Despite recognition of the enormous opportunities artificial intelligence (AI) presents to organisations of all sizes, fears around AI adoption focus on:

- The overwhelming and fast-growing number of AI tools available.

- The myriad options for adopting and using them (and the danger of falling foul of related risks along the way).

- Employee resistance to using tools they may equate with job cuts.

- The pressure to deliver dramatic results across the business.

Delivering learning that all employees can access at the right technical level for their roles cuts through such anxieties. For leaders, it breaks down the information overload, helping to inform and define strategy while managing boardroom expectations. And the right training, delivered across the workforce, drives a deeper cultural shift, equipping employees with the data literacy to understand and unlock AI’s potential across the broader business.

The future is now: The AI revolution

The race to adopt AI has been anything but slow off the starting blocks. Whether it’s Amazon using predictive analytics for ‘anticipatory shipping’, ING Bank using an AI chatbot to enhance self-service capabilities or Trivago using ‘Smart AI Search’ to personalise accommodation booking, no industry has been immune to the allure of AI.

Accelerated uptake is reflected in research. For six years to 2023, for instance, AI adoption among over 800 organisations polled for McKinsey’s annual Global Report hovered at around 50%. In 2024, this jumped to 72%, with 75% predicting that AI would lead to ‘significant or disruptive change in their industries’.

The geopolitical context only reinforces the perception of AI as an inexorable force, with the US and China entangled in an ongoing ‘AI arms race’. At the end of January, China’s DeepSeek, an open-source AI model that seems to use fewer resources and yet still perform well against rival OpenAI’s ChatGPT, unsettled markets, wiping billions off US tech stocks (although they later rallied).

AI increasingly features in domestic politics too: Elon Musk’s expertise in AI seems integral to his scheme to slash US federal spending, while earlier in the year the UK government launched an AI Opportunities Action Plan to transform public services.

Fear drivers

This scale of adoption, outweighed only by the expectation of what is yet to come, has understandably made some business leaders, and the wider workforce, nervous. At worst, when bundled into the unknowns surrounding any nascent technology, there arises a kind of cognitive distortion. Individuals and wider society can struggle to see beyond the negatives, and start catastrophising the potential impact of AI, preventing logical consideration of the issues at hand.

On a business level, decision making can slow to a crawl as leaders struggle to unravel the cultural and operational complexities AI seems to present. An instinctive response is to ‘wait and see’ or avoid AI altogether. Meanwhile, a minority of businesses forge ahead reshaping the landscape — and leaving the rest to catch up, or disappear altogether, in a world already changed.

The speed at which a business can pull away from the competition was shown most recently by Cursor, an AI code editor. It broke records in 2024, becoming the fastest company to reach $100m in annual recurring revenue, in just 12 months and with a team of fewer than 20 people. Other, older AI success stories include Spotify and Netflix, which have used AI to make personal recommendations, refining them based on real-time interactions to make the AI smarter over time.

But positive innovations are peppered with tales of AI failures. For all the enthusiasm of the UK government’s AI action plan, for instance, it went on to announce it had shut down or dropped at least half a dozen AI pilots intended for improving the welfare system, blaming ‘frustrations and false starts’ in ensuring AI systems are ‘scalable, reliable and thoroughly tested’. These stories can intensify the sense of risk, undermining the will to experiment, and stifling innovation.

From fear to risk-mitigation

Ignoring concerns is not the answer. For all that AI can offer in operational and cost efficiencies, improved decision making, and client/customer engagement, the limitations and risks of AI are real. But in keeping with the management principle of ‘what gets measured gets managed’, the risks need to be defined and understood. They include:

- Ethical risks around AI bias: Data can unintentionally lead to unfair or discriminatory outcomes, resulting in reputational damage.

- Security and privacy risks: AI systems handle vast amounts of sensitive data, increasing the risk of cyberattacks, data breaches, and misuse.

- Regulatory challenges: AI laws remain a work in progress, with global approaches differing between the US, China, the UK and EU. Global compliance becomes an uncertain and shifting target.

- Job displacement: Fears that AI will lead to swathes of job losses can cause employee resistance to AI adoption.

- AI hallucinations and inaccuracy: AI models can generate false or misleading information, leading to poor decision-making and reputational damage.

- Cost and complexity: AI projects can require significant upfront investment, and time and effort to work through implementation challenges, with uncertain ROI.

This exercise alone highlights risks that may in fact be familiar, and manageable. After all, we’ve faced disruptive technologies before. From mobile computing and smartphones to social media, e-commerce, media streaming, electric vehicles and smart devices, change has punctuated our lives in innumerable dramatic ways since the turn of the millennium. And companies have evolved to absorb and embrace the impact.

Take cloud computing, which first emerged in the mid-2000s. There were huge concerns about cloud systems around data breaches, regulatory compliance, and governance. But industries learned to implement robust security measures such as end-to-end encryption, zero-trust architectures, and compliance automation to align with regulations including GDPR. Adopting hybrid cloud approaches and leveraging middleware solutions also meant that companies could overcome the complexity of migrating from on-premises to cloud-based infrastructure.

Cloud computing, as a result, has fundamentally reshaped the modern workplace, freeing up employees who were tied to office networks and physical servers. Tools like Google Workspace, Microsoft 365, and Slack have enabled real-time collaboration from anywhere and reduced dependency on office spaces.

Experience may even present potential solutions for challenges that seem altogether new and peculiar to AI. In our recent article AI Bias: The hidden risk you can’t ignore, our Chief Content Officer Prasad Gollakota explores ways of dealing with the risk that AI could produce unfair outcomes, or favour or disadvantage certain groups.

These include developing diverse and representative datasets, using Explainable AI (XAI) techniques to understand and interrogate how decisions are made, investing in ethical frameworks and governance structures, regularly auditing AI systems, employing diverse development teams, and implementing regular training for staff.

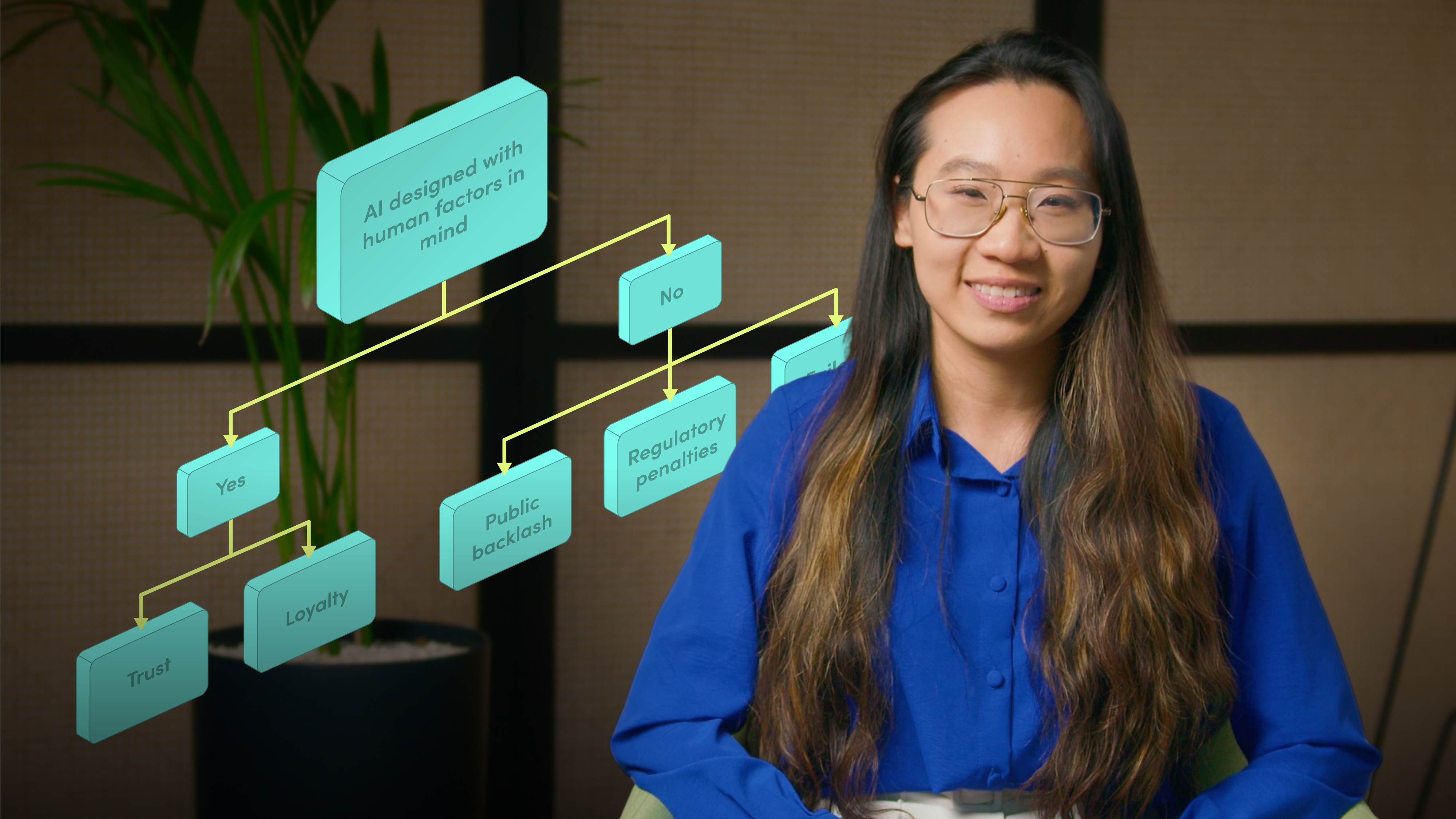

The people challenge

More difficult, however, may be the challenge of addressing the risks that are harder to see or articulate, that is, those arising from how people interact with data and AI. AI isn’t a back-office tool largely controlled by an expert technical development team. It is underpinned and enabled by extensive use of data, which only works in practice when people interact with it. Employees at all levels of seniority need to be data literate so that they can leverage the tools and interpret AI outputs in ways that create opportunity but also recognise and limit risk.

Success requires businesses to invest in training employees to understand and use data and AI effectively. This can also help nurture buy-in, shifting the cultural mindset to the opportunities of a data-enabled future, in which everyone knows how to make the most of the efficiencies AI can offer. This level of firm-wide data literacy reduces the likelihood of internal resistance, in which groups of employees become hostile to AI advances, and risks proliferate.

Findings from PwC’s 28th Annual Global CEO Survey, for example, show that CEOs are already seeing promising results in the use of GenAI, with one-third saying it has increased revenue and profitability over the past year, while half expect their investments in the technology to increase profits in the year ahead.

But it also finds fewer than a third (31%) are systematically integrating AI into workforce and skills. “This could be a misstep,” states the report. “Realising the potential of GenAI will depend on employees knowing when and how to use AI tools in their work — and understanding the potential pitfalls.”

Defining success

When employees are successfully brought into the process, the outcomes can be profound. Take the example of an AI project at a large US healthcare insurance company, recently detailed in the Harvard Business Review.

The company introduced AI to improve the indexing of claims, which involved a vast and repetitive data-entry process, and an extremely high degree of error. The new AI approach required considerable upfront time and effort to choose the right mix of tools that were not prohibitively expensive, and it was described as “more intensive and complex than other IT implementation projects”.

But the outcome was improved productivity by a factor of three, and considerably enhanced accuracy, with only 2.7% of the documents still needing to go to human indexers to be reworked, compared to nearly 90% before the introduction of AI.

Essential factors for success were cited as “understanding the business context” and “working with employees on the ground”. In fact, the company reported very little internal resistance once employees could see how AI would improve their jobs. Headcount has remained much the same as before the AI implementation — but roles have evolved to focus on more interesting, higher value work.

The learning journey: Introducing Data Unlocked

In light of this fast-shifting market, and the need to involve employees in the use of data and AI, we recently unveiled our Data Unlocked platform, designed to support data and AI literacy for enterprises. At our launch event, we were struck by the many conversations focused on the need for concerted action to leverage the opportunities of AI innovation, as well as to mitigate its limitations.

AI and Digital Skills Strategist Glyn Townsend provided the keynote address, with a compelling insight into why today’s industrial revolution differs from anything that has gone before. Quite simply, people didn’t previously live long enough for their skills to become obsolete. Now we have several generations in the workforce at the same time, each with vastly different experiences and levels of data literacy. He made a powerful case for workforce reskilling to keep everyone aligned with the unprecedented pace of change.

This point was also reinforced in our recent webinar, Building Workforce Capabilities for an AI-Proofed Business, which highlighted the importance of data-skills learning, while drilling down more deeply into what this means in practice. The discussion focused on how businesses can span the chasm between what an LLM is and how it is actually applied and used in a data-driven organisation. And it explored the ways in which employees can be upskilled to interact with data and AI day-to-day, ensuring AI informs rather than dictates decision making, thereby also mitigating risk.

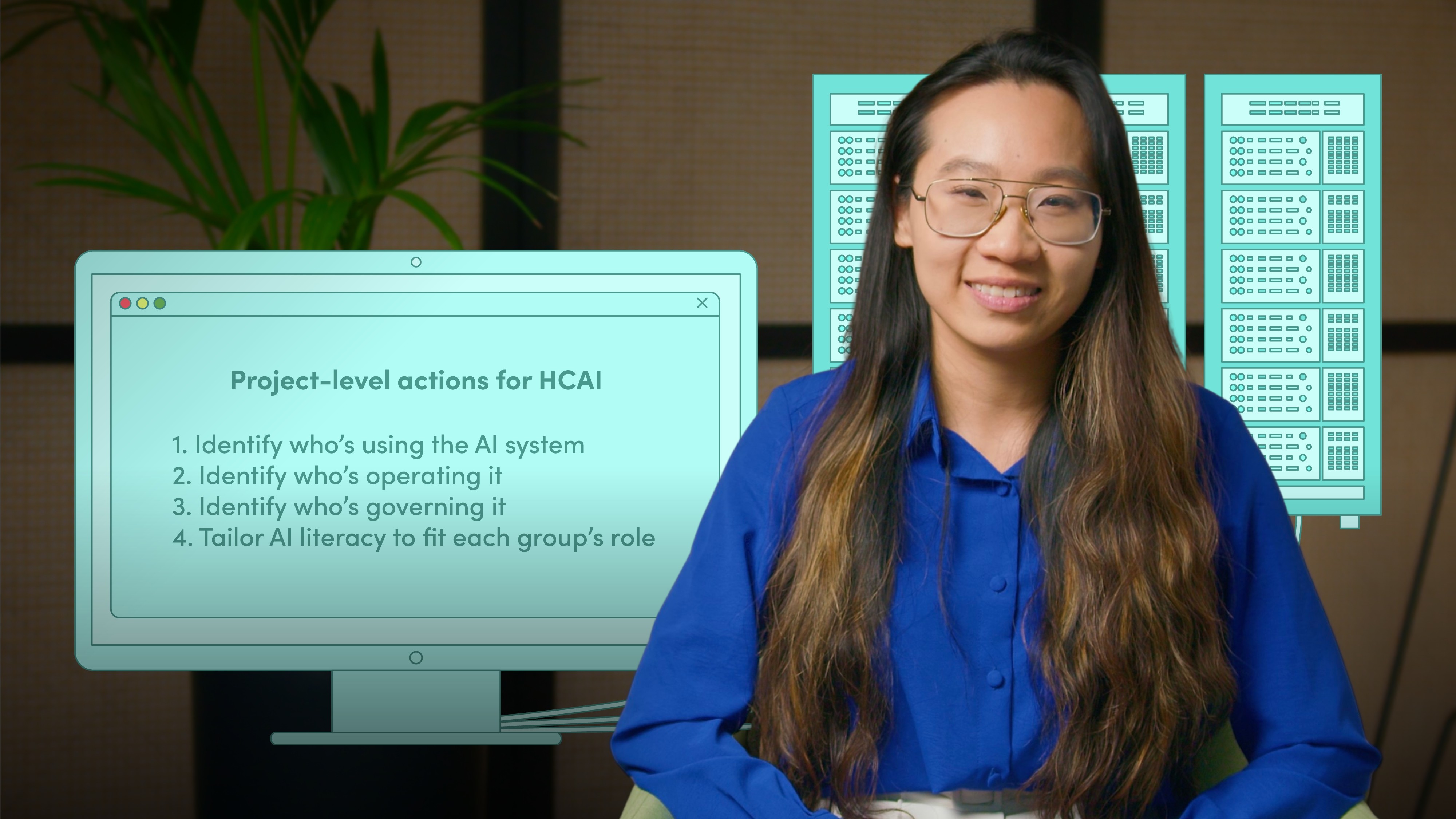

Data Unlocked puts these ideas into practice. The on-demand, expert-led learning platform offers a comprehensive approach to data and AI literacy, covering topics from foundational skills to more advanced data analytics — allowing people at all levels of the business to engage confidently with data and AI tools in their daily roles.

Highly practical and interactive videos, divided into clear pathways across the platform, help learners put data at the core of all decision making, while supporting the development of a data-driven culture essential for organisations adopting AI.

In one such video, ‘Implementing AI in your Organisation’, Data Scientist and Engineer Elizabeth Stanley takes learners through the AI project lifecycle, a structured process that includes ideation, data collection, model development, deployment, and ongoing feedback loops.

Elsewhere, in the AI Foundations pathway, Maurice Ewing, Managing Director of ConquerX, and teacher of data analytics at Columbia University, breaks down AI’s strengths and limitations, exploring practical techniques like prompt engineering, managing AI’s technical constraints, and balancing human oversight with AI-driven insights.

Training of this calibre, applied across the business, meets the immediate need to equip the workforce with vital data and AI skills that can integrate into daily workflows, enhancing quality, productivity, and cost-efficiency. But it can do much more than that too, transforming unstructured fears into a clear roadmap for AI-enabled success.

To delve deeper into the importance of data and AI literacy, and how organisations can empower their teams to thrive in the data-centric age, read our report, Today solved: Data and AI skills that redefine tomorrow. Find out more and download the report now.
